pip install numereval

Not an official Numerai tool

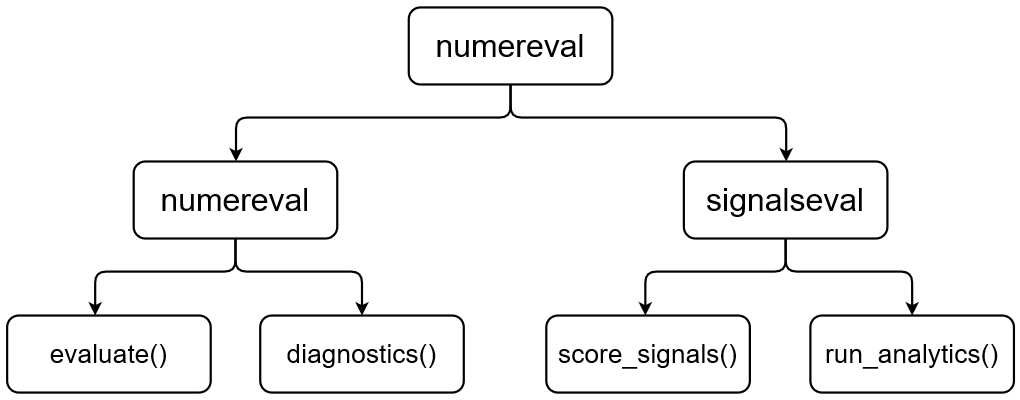

`evaluate` is a generic function that calculates basic per-era correlation stats, with optional feature exposure and plotting.

It is useful for evaluating a custom validation split taken from the training data. It does not report MMC metrics or correlation with example predictions.

    from numereval.numereval import evaluate

    evaluate(training_data, plot=True, feature_exposure=False)

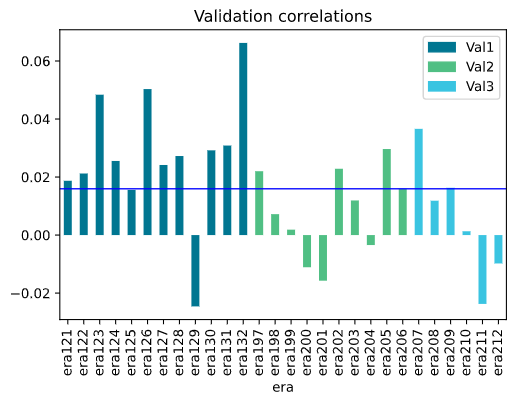

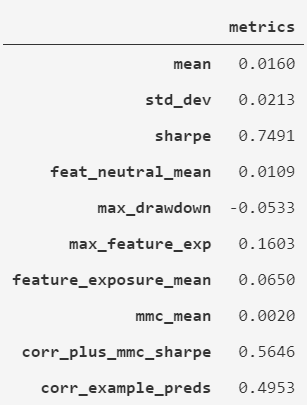

| Correlations plot | Returned metrics |

|---|---|

|

|
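To make "per-era correlation stats" and "feature exposure" concrete, here is a minimal sketch of how such numbers can be computed with pandas. It is not numereval's implementation: the column names (`era`, `target`, `prediction`), the helper names, and the root-mean-square definition of feature exposure are assumptions chosen for illustration.

```python
import numpy as np
import pandas as pd


def per_era_correlation(df, target_col="target", pred_col="prediction", era_col="era"):
    """Correlation between rank-transformed predictions and targets, one value per era."""
    scores = {}
    for era, era_df in df.groupby(era_col):
        # Rank predictions within the era so only their ordering matters.
        ranked = era_df[pred_col].rank(pct=True, method="first")
        scores[era] = np.corrcoef(era_df[target_col], ranked)[0, 1]
    return pd.Series(scores)


def summarize(era_scores):
    """Mean, standard deviation and a Sharpe-like ratio of the per-era scores."""
    mean = era_scores.mean()
    std = era_scores.std(ddof=0)
    return {"mean": mean, "std": std, "sharpe": mean / std}


def feature_exposure(df, feature_cols, pred_col="prediction"):
    """Correlation of the predictions with each feature, reduced to two numbers."""
    exposures = df[feature_cols].corrwith(df[pred_col])
    return {
        "max_feature_exposure": exposures.abs().max(),
        # Root-mean-square exposure: one possible convention, assumed here.
        "feature_exposure": float(np.sqrt((exposures ** 2).mean())),
    }
```

Calling `summarize(per_era_correlation(df))` on a frame with those columns gives a small dictionary of stats. The values may differ from what `evaluate` returns if the library uses other conventions.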

`diagnostics` reproduces the scores shown on the diagnostics dashboard locally, with optional plotting of per-era correlations.

from numereval.numereval import diagnostics

validation_data = tournament_data[tournament_data.data_type == "validation"]

diagnostics(

validation_data,

plot=True,

example_preds_loc="numerai_dataset_244\example_predictions.csv",

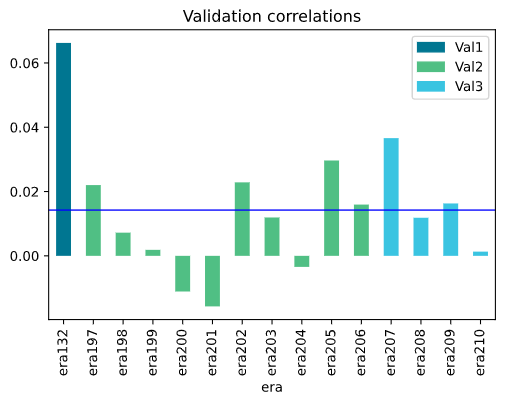

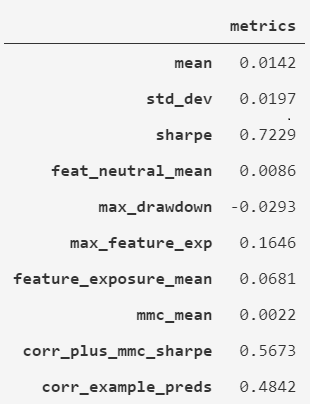

)| Validation plot | Returned metrics |

|---|---|

|

|

To score only specific eras, pass a list of eras to `diagnostics` in the format `eras = ["era121", "era122", "era209"]`.

    from numereval.numereval import diagnostics

    validation_data = tournament_data[tournament_data.data_type == "validation"]

    # skip the first 11 and the last 2 validation eras
    eras = validation_data.era.unique()[11:-2]

    diagnostics(
        validation_data,
        plot=True,
        example_preds_loc=r"numerai_dataset_244\example_predictions.csv",
        eras=eras,
    )
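The slice `unique()[11:-2]` works because `unique()` returns the era labels in order of first appearance, so slicing drops eras from the start and end of that sequence. A small sketch of the same idea on a toy frame follows. The `select_eras` helper is hypothetical and only illustrates the kind of filtering an `eras` argument implies, not how `diagnostics` handles it internally.

```python
import pandas as pd


def select_eras(df, eras, era_col="era"):
    """Keep only the rows whose era label is in `eras`."""
    return df[df[era_col].isin(list(eras))]


# Toy data: five eras with two rows each (labels are made up for the example).
toy = pd.DataFrame({
    "era": [f"era{n}" for n in (1, 1, 2, 2, 3, 3, 4, 4, 5, 5)],
    "prediction": [0.1, 0.9, 0.2, 0.8, 0.3, 0.7, 0.4, 0.6, 0.5, 0.5],
})

# Drop the first and the last era, analogous to unique()[11:-2] above.
middle_eras = toy.era.unique()[1:-1]
subset = select_eras(toy, middle_eras)
```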

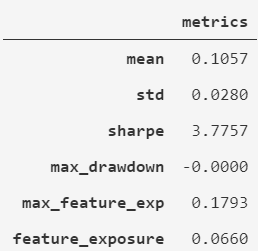

Note: Since predictions are neutralized against Numerai's internal features before scoring, results from `numereval.signalseval.run_analytics()` will not exactly match diagnostics or live scores.
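To illustrate what neutralization means in general, the sketch below removes from a prediction vector the part that is linearly explained by a feature matrix. This is generic linear neutralization under assumed inputs, not Numerai's scoring code, and it cannot reproduce Numerai's results because the internal features are not available. The function name and the `proportion` parameter are chosen for illustration.

```python
import numpy as np


def neutralize(predictions, features, proportion=1.0):
    """Subtract the least-squares projection of predictions onto the features.

    predictions : 1-D array-like of length n.
    features    : 2-D array-like of shape (n, k).
    proportion  : 1.0 removes the full linear exposure, 0.0 removes nothing.
    """
    preds = np.asarray(predictions, dtype=float)
    feats = np.asarray(features, dtype=float)
    # pinv(feats) @ preds gives the least-squares coefficients;
    # feats @ coefficients is the part of preds the features can explain.
    projection = feats @ (np.linalg.pinv(feats) @ preds)
    return preds - proportion * projection
```

With `proportion=1.0` the result is orthogonal to every feature column, which is why scores computed on raw predictions can differ from scores computed after neutralization.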

import numereval

from numereval.signalseval import run_analytics, score_signals

#after assigning predictions

train_era_scores = train_data.groupby(train_data.index).apply(score_signals)

test_era_scores = test_data.groupby(test_data.index).apply(score_signals)

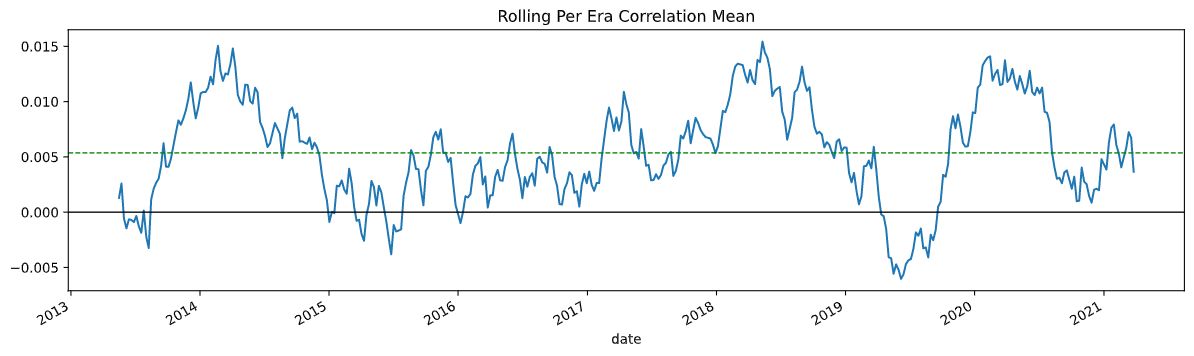

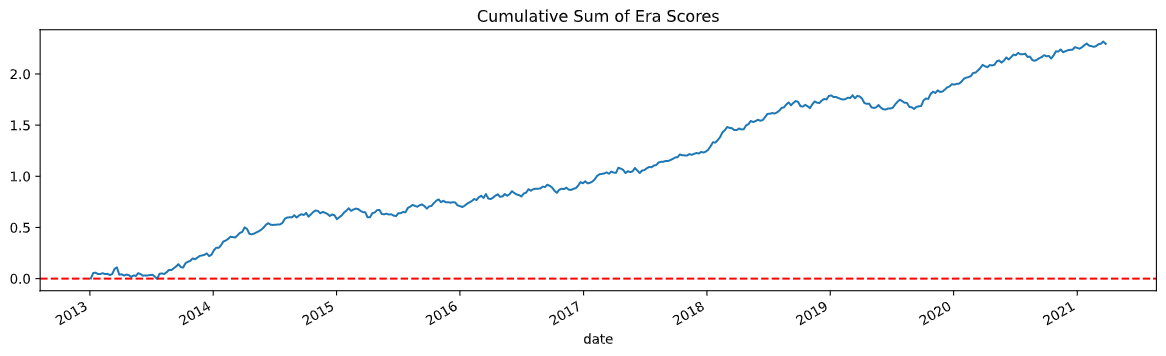

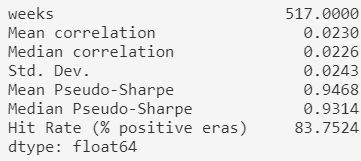

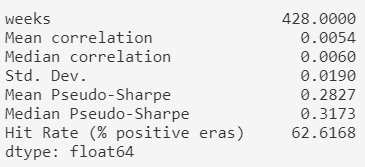

train_scores = run_analytics(train_era_scores, plot=False)

test_scores = run_analytics(test_era_scores, plot=True)| train_scores | test_scores |

|---|---|

|

|
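Once per-era scores are available as a pandas Series, simple summaries can also be computed by hand. The sketch below shows an additive max drawdown and the share of positive eras. These helpers are hypothetical and are not taken from `run_analytics`, whose exact set of returned metrics may differ.

```python
import pandas as pd


def max_drawdown(era_scores):
    """Largest drop of the cumulative score from its running peak (additive)."""
    cumulative = era_scores.cumsum()
    # Clip at zero so a loss in the very first era counts as a drawdown too.
    running_peak = cumulative.cummax().clip(lower=0)
    return (running_peak - cumulative).max()


def positive_era_share(era_scores):
    """Fraction of eras with a score above zero."""
    return (era_scores > 0).mean()
```

For example, `max_drawdown(test_era_scores)` gives a single number describing the worst losing stretch across eras, assuming `test_era_scores` is a numeric Series ordered in time.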

Thanks to Jason Rosenfeld for allowing `run_analytics()` to be integrated into the library.

Docs will be updated soon!