-

Notifications

You must be signed in to change notification settings - Fork 113

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

refactor code using black formatter and add dockerhub push cmd, and update base image to version containing cudnn8 #13

Open

FrostFlowerFairy

wants to merge

4

commits into

alesaccoia:main

Choose a base branch

from

TyreseDev:main

base: main

Could not load branches

Branch not found: {{ refName }}

Loading

Could not load tags

Nothing to show

Loading

Are you sure you want to change the base?

Some commits from the old base branch may be removed from the timeline,

and old review comments may become outdated.

Open

Changes from 2 commits

Commits

Show all changes

4 commits

Select commit

Hold shift + click to select a range

File filter

Filter by extension

Conversations

Failed to load comments.

Loading

Jump to

Jump to file

Failed to load files.

Loading

Diff view

Diff view

There are no files selected for viewing

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -13,18 +13,15 @@ VoiceStreamAI is a Python 3 -based server and JavaScript client solution that en | |

| - Customizable audio chunk processing strategies. | ||

| - Support for multilingual transcription. | ||

|

|

||

| ## Demo Video | ||

| ## Demo | ||

|

|

||

| [View Demo Video](https://raw.githubusercontent.com/TyreseDev/VoiceStreamAI/main/img/voicestreamai_test.mp4) | ||

|

|

||

| https://github.com/alesaccoia/VoiceStreamAI/assets/1385023/9b5f2602-fe0b-4c9d-af9e-4662e42e23df | ||

|

|

||

| ## Demo Client | ||

|

|

||

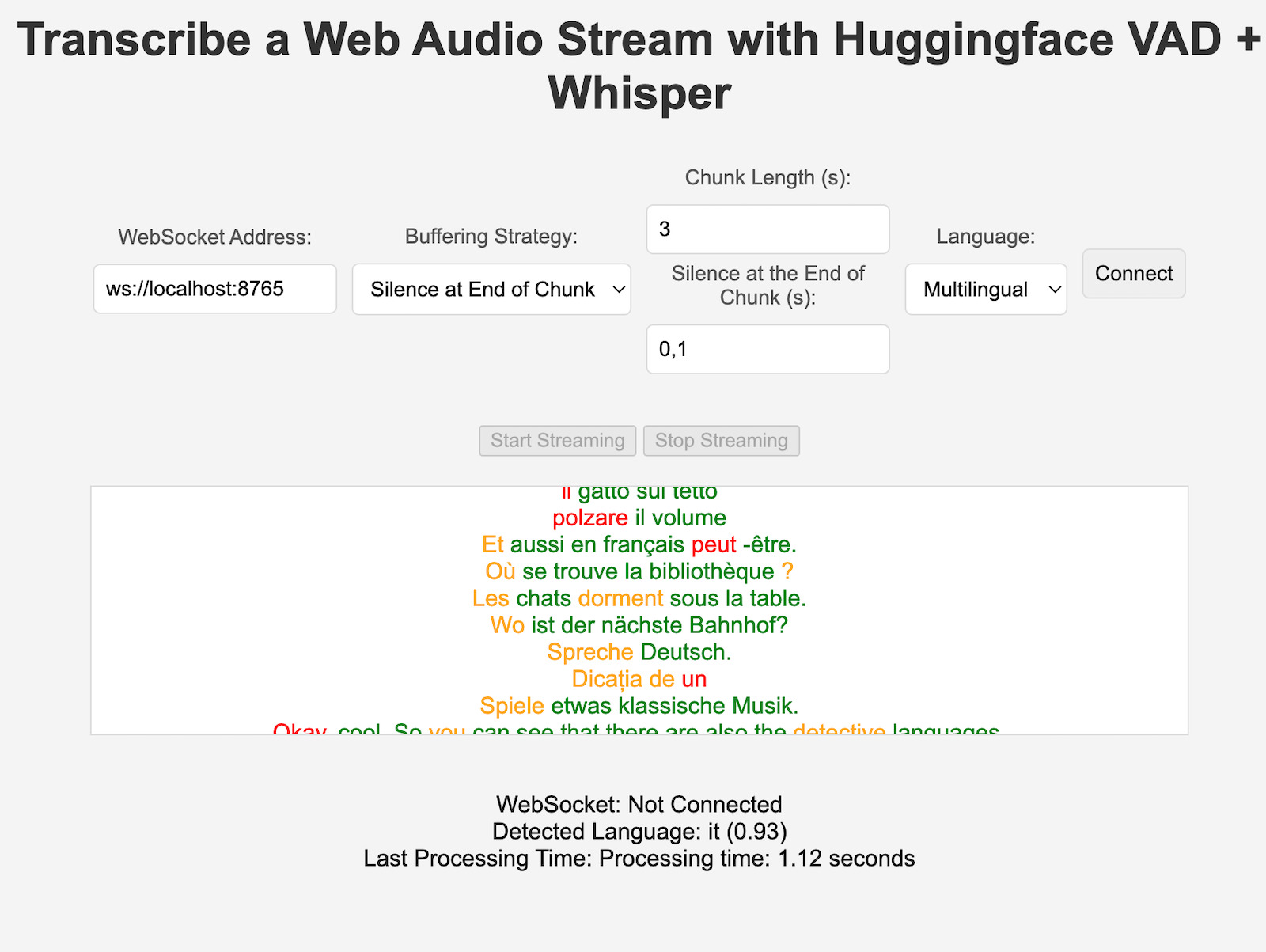

|  | ||

|  | ||

|

|

||

| ## Running with Docker | ||

|

|

||

| This will not guide you in detail on how to use CUDA in docker, see for example [here](https://medium.com/@kevinsjy997/configure-docker-to-use-local-gpu-for-training-ml-models-70980168ec9b). | ||

| This will not guide you in detail on how to use CUDA in docker, see for example [here](https://medium.com/@kevinsjy997/configure-docker-to-use-local-gpu-for-training-ml-models-70980168ec9b). | ||

|

|

||

| Still, these are the commands for Linux: | ||

|

|

||

|

|

@@ -52,13 +49,13 @@ After getting your VAD token (see next sections) run: | |

|

|

||

| sudo docker volume create huggingface_models | ||

|

|

||

| sudo docker run --gpus all -p 8765:8765 -v huggingface_models:/root/.cache/huggingface -e PYANNOTE_AUTH_TOKEN='VAD_TOKEN_HERE' voicestreamai | ||

| sudo docker run --gpus all -p 80:80 -v huggingface_models:/root/.cache/huggingface -e PYANNOTE_AUTH_TOKEN='VAD_TOKEN_HERE' voicestreamai | ||

| ``` | ||

|

|

||

| The "volume" stuff will allow you not to re-download the huggingface models each time you re-run the container. If you don't need this, just use: | ||

|

|

||

| ```bash | ||

| sudo docker run --gpus all -p 8765:8765 -e PYANNOTE_AUTH_TOKEN='VAD_TOKEN_HERE' voicestreamai | ||

| sudo docker run --gpus all -p 80:80 -e PYANNOTE_AUTH_TOKEN='VAD_TOKEN_HERE' voicestreamai | ||

| ``` | ||

|

|

||

| ## Normal, Manual Installation | ||

|

|

@@ -92,7 +89,7 @@ The VoiceStreamAI server can be customized through command line arguments, allow | |

| - `--asr-type`: Specifies the type of Automatic Speech Recognition (ASR) pipeline to use (default: `faster_whisper`). | ||

| - `--asr-args`: A JSON string containing additional arguments for the ASR pipeline (one can for example change `model_name` for whisper) | ||

| - `--host`: Sets the host address for the WebSocket server (default: `127.0.0.1`). | ||

| - `--port`: Sets the port on which the server listens (default: `8765`). | ||

| - `--port`: Sets the port on which the server listens (default: `80`). | ||

|

|

||

| For running the server with the standard configuration: | ||

|

|

||

|

|

@@ -103,7 +100,7 @@ For running the server with the standard configuration: | |

| python3 -m src.main --vad-args '{"auth_token": "vad token here"}' | ||

| ``` | ||

|

|

||

| You can see all the command line options with the command: | ||

| You can see all the command line options with the command: | ||

|

|

||

| ```bash | ||

| python3 -m src.main --help | ||

|

|

@@ -112,13 +109,12 @@ python3 -m src.main --help | |

| ## Client Usage | ||

|

|

||

| 1. Open the `client/VoiceStreamAI_Client.html` file in a web browser. | ||

| 2. Enter the WebSocket address (default is `ws://localhost:8765`). | ||

| 2. Enter the WebSocket address (default is `ws://localhost/ws`). | ||

| 3. Configure the audio chunk length and offset. See below. | ||

| 4. Select the language for transcription. | ||

| 5. Click 'Connect' to establish a WebSocket connection. | ||

| 6. Use 'Start Streaming' and 'Stop Streaming' to control audio capture. | ||

|

|

||

|

|

||

| ## Technology Overview | ||

|

|

||

| - **Python Server**: Manages WebSocket connections, processes audio streams, and handles voice activity detection and transcription. | ||

|

|

@@ -207,10 +203,8 @@ Please make sure that the end variables are in place for example for the VAD aut | |

|

|

||

| ### Dependence on Audio Files | ||

|

|

||

| Currently, VoiceStreamAI processes audio by saving chunks to files and then running these files through the models. | ||

| Currently, VoiceStreamAI processes audio by saving chunks to files and then running these files through the models. | ||

|

|

||

| ## Contributors | ||

|

|

||

| - Alessandro Saccoia - [[email protected]](mailto:[email protected]) | ||

|

|

||

| This project is open for contributions. Feel free to fork the repository and submit pull requests. | ||

This file was deleted.

Oops, something went wrong.

Oops, something went wrong.

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The Dockerfile in the current repository will lead to the following issues:

The current commit can fix this issue.