This is code for "DVI: Depth Guided Video Inpainting for Autonomous Driving". ECCV 2020.

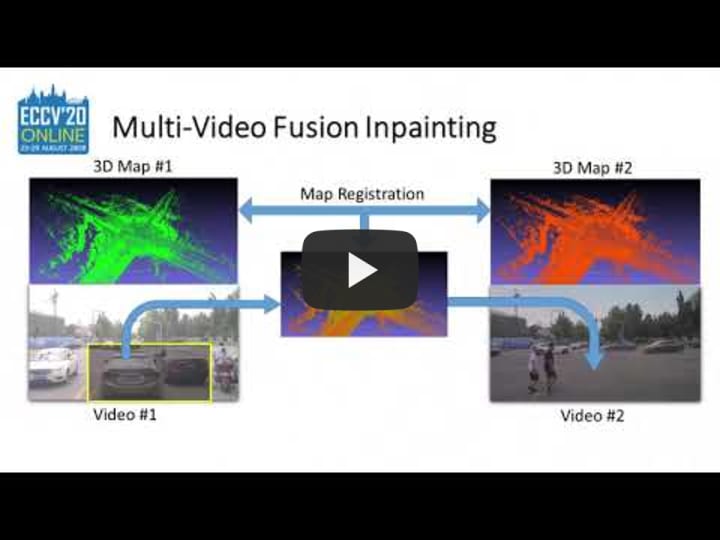

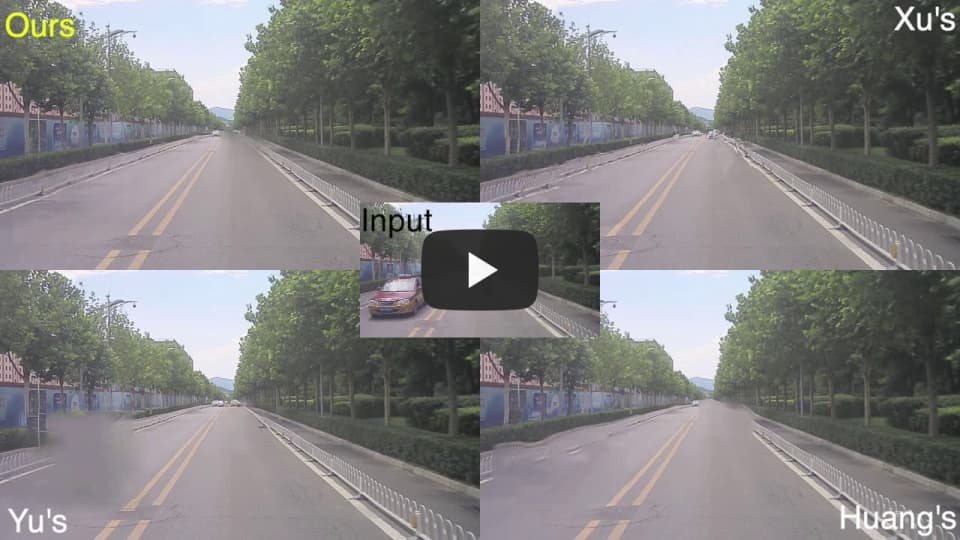

To get clear street-view and photo-realistic simulation in autonomous driving, we present an automatic video inpainting algorithm that can remove traffic agents from videos and synthesize missing regions with the guidance of depth/point cloud. By building a dense 3D map from stitched point clouds, frames within a video are geometrically correlated via this common 3D map. In order to fill a target inpainting area in a frame, it is straightforward to transform pixels from other frames into the current one with correct occlusion. Furthermore, we are able to fuse multiple videos through 3D point cloud registration, making it possible to inpaint a target video with multiple source videos.

Please download full data at Apolloscape or using link below. The first video inpainting dataset with depth.

mask_and_image_0.zip data_0.zip lidar_bg_0.zip

mask_and_image_1.zip data_1.zip lidar_bg_1.zip

mask_and_image_2.zip data_2.zip lidar_bg_2.zip

mask_and_image_3.zip data_3.zip lidar_bg_3.zip

The folder structure of the inpainting is as follows:

-

xxx-yyy_mask.zip: xxx.aaa.jpg is original image. xxx.aaa.png is labelled mask of cars.

-

xxx-yyy.zip: Data includes ds_map.ply, global_poses.txt, rel_poses.txt, xxx.aaa_optR.xml. ds_map.ply is dense map build from lidar frames.

-

lidar_bg.zip: lidar background point cloud in ply format.

catkin_ws

├── build

├── devel

├── src

code

├── libDAI-0.3.0

├── opengm

data

├── pandora_liang

├── set2

├── 1534313590-1534313597

├── 1534313590-1534313597_mask

├── 1534313590-1534313597_results

├── lidar_bg

├── ...

-

Install opengm

download OpenGM 2.3.5 at http://hciweb2.iwr.uni-heidelberg.de/opengm/index.php?l0=library

or

https://github.com/opengm/opengm for version 2.0.2

-

Build opengm with MRF:

cd code/opengm mkdir build cd build cmake -DWITH_MRF=ON .. make sudo make install -

Make catkin:

cd catkin_ws source devel/setup.bash catkin_make

cd catkin_ws

rosrun loam_velodyne videoInpaintingTexSynthFusion 1534313590 1534313597 1534313594 ../data/pandora_liang/set2

Please cite our paper in your publications if our dataset is used in your research.

DVI: Depth Guided Video Inpainting for Autonomous Driving.

Miao Liao, Feixiang Lu, Dingfu Zhou, Sibo Zhang, Wei Li, Ruigang Yang. ECCV 2020. PDF, Result Video, Presentation Video

@article{liao2020dvi,

title={DVI: Depth Guided Video Inpainting for Autonomous Driving},

author={Liao, Miao and Lu, Feixiang and Zhou, Dingfu and Zhang, Sibo and Li, Wei and Yang, Ruigang},

journal={arXiv preprint arXiv:2007.08854},

year={2020}

}

ECCV 2020 Presentation Video

Result Video

Get MRF-LIB working within opengm2:

~/code/opengm/build$ cmake -DWITH_MRF=ON .. #turn on MRF option within opengm cmake

~/code/opengm/src/external/patches/MRF$ ./patchMRF-v2.1.sh

Change to:

TRWS_URL=https://download.microsoft.com/download/6/E/D/6ED0E6CF-C06E-4D4E-9F70-C5932795CC12/

Within patchMRF-v2.1.sh