This is a forked version of "OpenFace: an open source facial behavior analysis toolkit" - https://github.com/TadasBaltrusaitis/OpenFace

For instructions on how to install, compile, and use the project, please see the wiki.

More details about the project - http://www.cl.cam.ac.uk/research/rainbow/projects/openface/

This version adds an OSC client, which makes the project easier to interface with OSC-based projects.

This project makes it easy to create face-controlled interfaces and interactive experiences. The data coming out of OpenFace is sent over the OSC protocol, making it easy to connect with many popular prototyping and interaction design platforms such as Processing, TouchDesigner, Max/MSP, vvvv, Arduino, etc.

Using OSC also allows the data to be sent to a remote server, so OpenFace can be used to control remote applications and hardware.

The OSC client is currently incorporated into the "FaceLandmarkVid.cpp" Project.

The face landmarks, gaze, and pose data are sent to localhost ("127.0.0.1") on port 6448, using the following OSC addresses.

General face landmarks:

- "/openFace/faceLandmarks"

Eye landmarks:

- "/openFace/rightEye"

- "/openFace/leftEye"

Gaze vectors:

- "/openFace/gazeVectorR"

- "/openFace/gazeVectorL"

Head pose (position and angle):

- "/openFace/headPose"

Face action units:

- "/openFace/ActionUnits"
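If your platform has no OSC library, the messages on the addresses above can be decoded by hand. The following is a minimal sketch using only the Python standard library. It assumes each datagram is a single OSC message (not a bundle) and that the arguments are 32-bit floats, 32-bit integers, or strings; the actual argument types sent by this fork are not documented here, so treat that as an assumption. The function names are hypothetical and not part of this project.

```python
import struct


def _read_padded_string(data, offset):
    """Read a null-terminated ASCII string that is padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # OSC strings carry at least one null byte and end on a 4-byte boundary.
    next_offset = (end + 4) & ~3
    return text, next_offset


def parse_osc_message(data):
    """Decode one OSC message into (address, list_of_arguments).

    Assumption: only the 'f', 'i' and 's' type tags are handled.
    """
    address, offset = _read_padded_string(data, 0)
    type_tags, offset = _read_padded_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")

    args = []
    for tag in type_tags[1:]:
        if tag == "f":  # big-endian 32-bit float
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "i":  # big-endian 32-bit signed integer
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "s":  # padded string
            text, offset = _read_padded_string(data, offset)
            args.append(text)
        else:
            raise ValueError("unsupported OSC type tag: " + tag)
    return address, args
```

Given the raw bytes of a datagram, `parse_osc_message(packet)` returns the address (for example "/openFace/headPose") and the list of decoded values.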

The Face Action Units extraction is a key feature in OpenFace which makes it a great tool for live emotion analysis.

The "/openFace/ActionUnits" channel transmits 17 values, which represent Action Units 1, 2, 4, 5, 6, 7, 9, 10, 12, 14, 15, 17, 20, 23, 25, 26, and 45. Each value ranges from 0 (not present) through 1 (present at minimum intensity) to 5 (present at maximum intensity).

You can find more information about AUs and how to use them to analyse emotion here and here. More information about how OpenFace handles the Action Units can be found here.
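As an illustration of working with these values, the sketch below pairs the 17 numbers with their Action Unit numbers and lists the units that are present. It assumes the values arrive in the same order as the Action Units listed above, which you should verify against your own data. The label format ("AU01", "AU12", ...) and the function names are hypothetical.

```python
# Action Unit numbers, in the order listed in this README.
AU_NUMBERS = (1, 2, 4, 5, 6, 7, 9, 10, 12, 14, 15, 17, 20, 23, 25, 26, 45)


def label_action_units(values):
    """Map the 17 received values to labels such as 'AU01' or 'AU45'.

    Assumption: values are ordered like AU_NUMBERS.
    """
    if len(values) != len(AU_NUMBERS):
        raise ValueError("expected %d values, got %d" % (len(AU_NUMBERS), len(values)))
    return {"AU%02d" % number: value for number, value in zip(AU_NUMBERS, values)}


def active_units(values, threshold=1.0):
    """Return the labels whose intensity is at least `threshold`.

    The default of 1.0 corresponds to 'present at minimum intensity'.
    """
    labelled = label_action_units(values)
    return [name for name, value in labelled.items() if value >= threshold]
```

For example, a message in which only the ninth value is above 1 would be reported as `["AU12"]` under the ordering assumption above.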

You can use OSC Data Monitor for an easy data preview (make sure to add port 6448): https://www.kasperkamperman.com/blog/osc-datamonitor/
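If you prefer a quick check from the command line, a small UDP listener can show which addresses are arriving. This is a minimal sketch using only the Python standard library, not a replacement for a full OSC library. It assumes plain OSC messages; if the sender wraps them in bundles, the printed name will be "#bundle" instead of an address. `osc_address` and `preview` are hypothetical helper names.

```python
import socket


def osc_address(packet):
    """Return the OSC address pattern at the start of a packet."""
    end = packet.find(b"\x00")
    if end == -1:
        raise ValueError("packet has no null-terminated address")
    return packet[:end].decode("ascii")


def preview(host="127.0.0.1", port=6448, count=10):
    """Print the address and size of the next `count` datagrams.

    Blocks until `count` datagrams have been received.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        for _ in range(count):
            packet, _sender = sock.recvfrom(65535)
            print(osc_address(packet), len(packet), "bytes")
```

Call `preview()` while OpenFace is running to see the incoming addresses. Only one program can normally bind the port at a time, so close other monitors first.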

You can find examples of using the data on different platforms in: "./osc_examples"

If Visual Studio throws an import error, make sure the project settings match the screenshots in the "./osc_settings" folder.

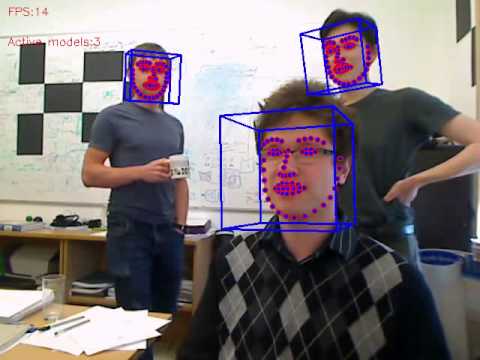

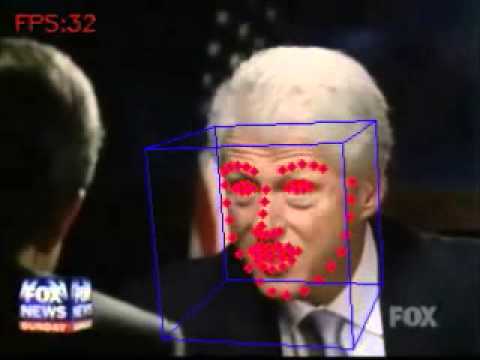

The system is capable of performing a number of facial analysis tasks:

- Facial Landmark Detection

- Facial Landmark and head pose tracking (links to YouTube videos)

- Facial Action Unit Recognition

- Gaze tracking (image of it in action)

- Facial Feature Extraction (aligned faces and HOG features)

Constrained Local Neural Fields for robust facial landmark detection in the wild. Tadas Baltrušaitis, Peter Robinson, and Louis-Philippe Morency. In IEEE Int. Conference on Computer Vision Workshops, 300 Faces in-the-Wild Challenge, 2013.

Rendering of Eyes for Eye-Shape Registration and Gaze Estimation. Erroll Wood, Tadas Baltrušaitis, Xucong Zhang, Yusuke Sugano, Peter Robinson, and Andreas Bulling. In IEEE International Conference on Computer Vision (ICCV), 2015.

Cross-dataset learning and person-specific normalisation for automatic Action Unit detection. Tadas Baltrušaitis, Marwa Mahmoud, and Peter Robinson. In Facial Expression Recognition and Analysis Challenge, IEEE International Conference on Automatic Face and Gesture Recognition, 2015.

Copyright information can be found in Copyright.txt.

You have to respect boost, TBB, dlib, and OpenCV licenses.

For inquiries about the commercial licensing of the OpenFace toolkit please contact [email protected]

I did my best to make sure that the code runs out of the box, but there are always issues, and I would be grateful for your understanding that this is research code and not a fully fledged product. If you encounter any problems or bugs, or have questions or suggestions, please contact me on GitHub or by email at [email protected].