Spark On Kubernetes via helm chart

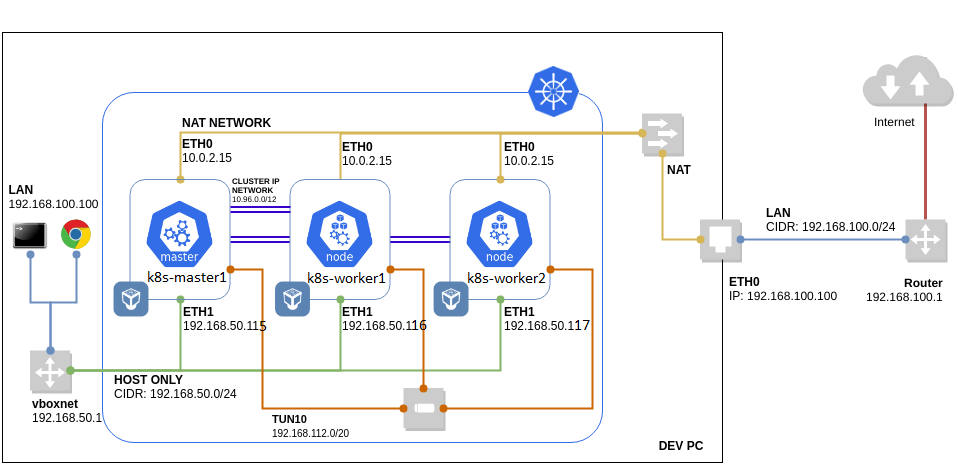

The control-plane & worker nodes addresses are :

192.168.56.115

192.168.56.116

192.168.56.117

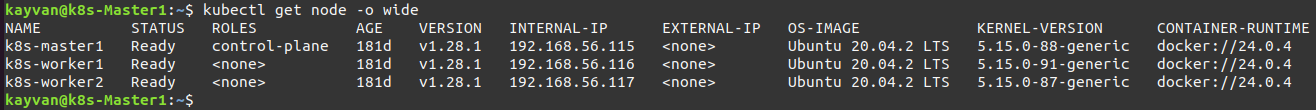

Kubernetes cluster nodes :

you can install helm via the link helm :

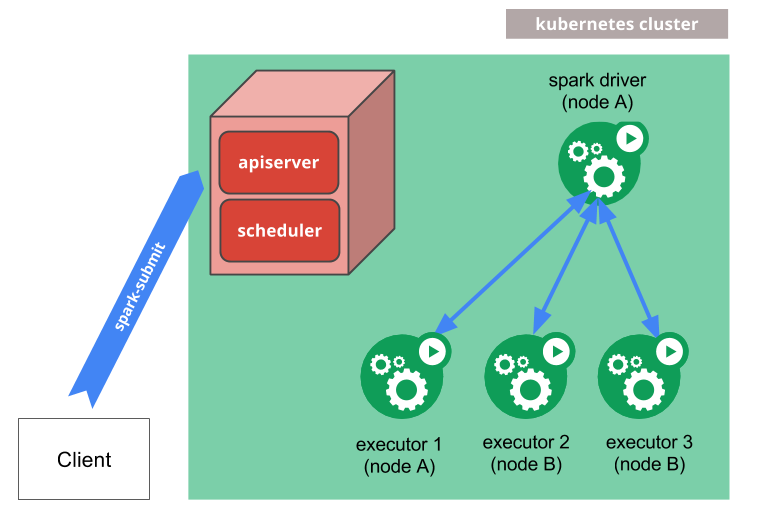

The Steps :

- Install spark via helm chart (bitnami) :

$ helm repo add bitnami https://charts.bitnami.com/bitnami

$ helm search repo bitnami

$ helm install kayvan-release oci://registry-1.docker.io/bitnamicharts/spark

$ helm upgrade kayvan-release bitnami/spark --set worker.replicaCount=5

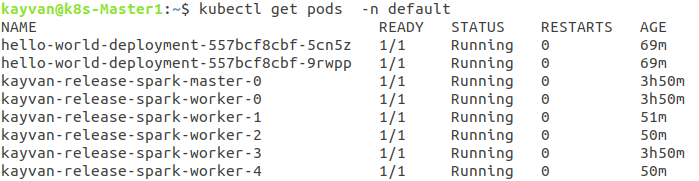

the installed 6 pods :

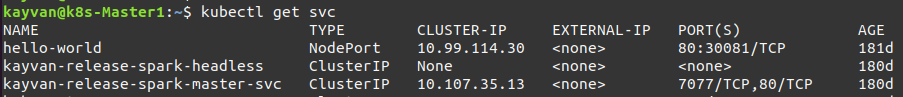

and Services (headless for statefull) :

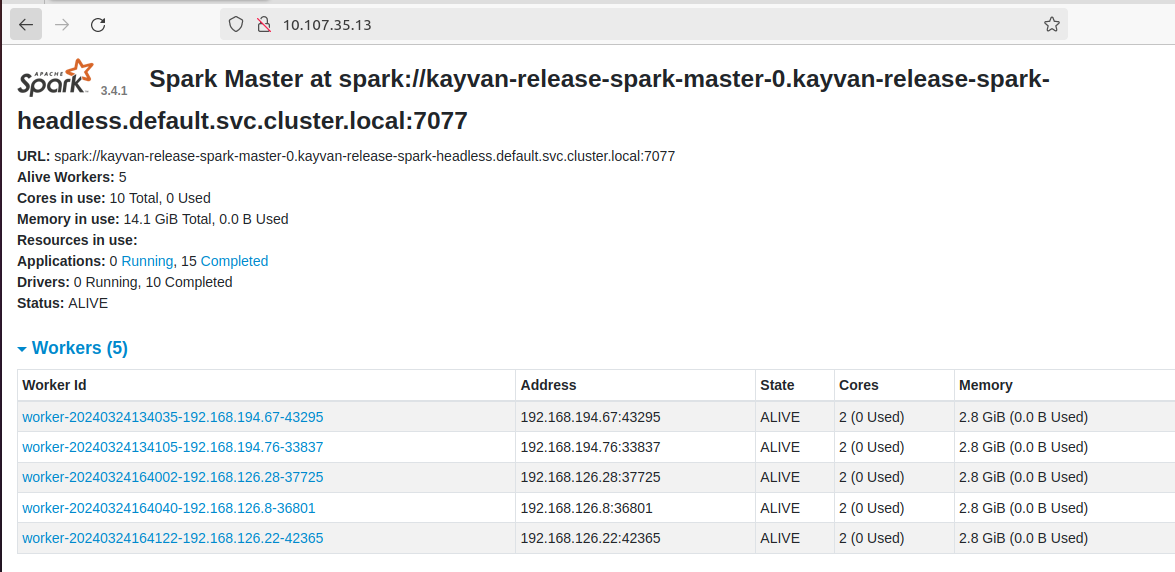

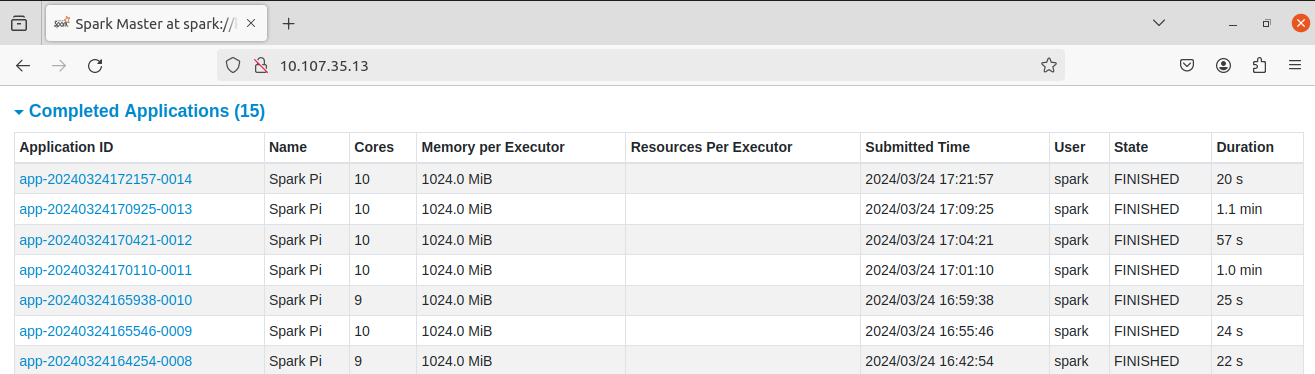

and the spark master ui is :

- type the below commands on kubernetes kube-apiserver :

kubectl exec -it kayvan-release-spark-master-0 -- ./bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master spark://kayvan-release-spark-master-0.kayvan-release-spark-headless.default.svc.cluster.local:7077 \

./examples/jars/spark-examples_2.12-3.4.1.jar 1000

or

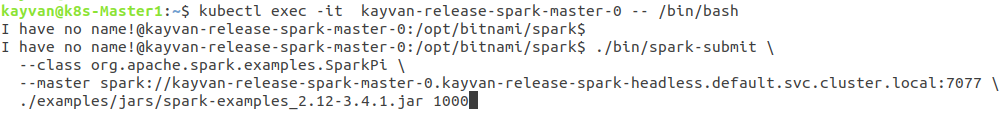

kubectl exec -it kayvan-release-spark-master-0 -- /bin/bash

./bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master spark://kayvan-release-spark-master-0.kayvan-release-spark-headless.default.svc.cluster.local:7077 \

./examples/jars/spark-examples_2.12-3.4.1.jar 1000

./bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master spark://kayvan-release-spark-master-0.kayvan-release-spark-headless.default.svc.cluster.local:7077 \

./examples/src/main/python/pi.py 1000

./bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master spark://kayvan-release-spark-master-0.kayvan-release-spark-headless.default.svc.cluster.local:7077 \

./examples/src/main/python/wordcount.py //filepath

the exact scala & python code of spark-examples_2.12-3.4.1.jar , pi.py & wordcount.py :

examples/src/main/scala/org/apache/spark/examples/SparkPi.scala

examples/src/main/python/pi.py

examples/src/main/python/wordcount.py

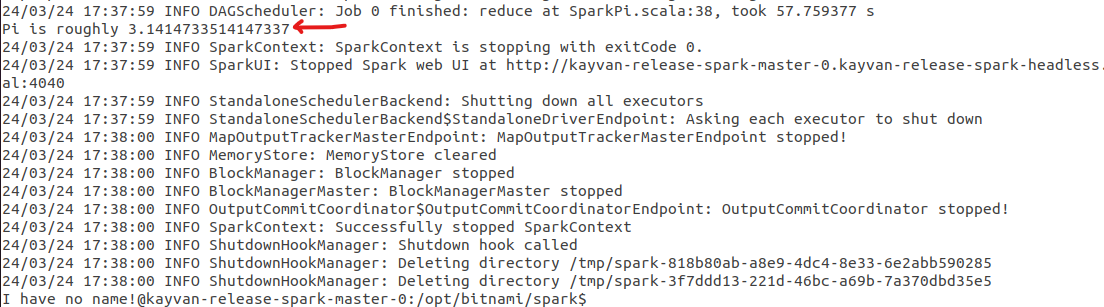

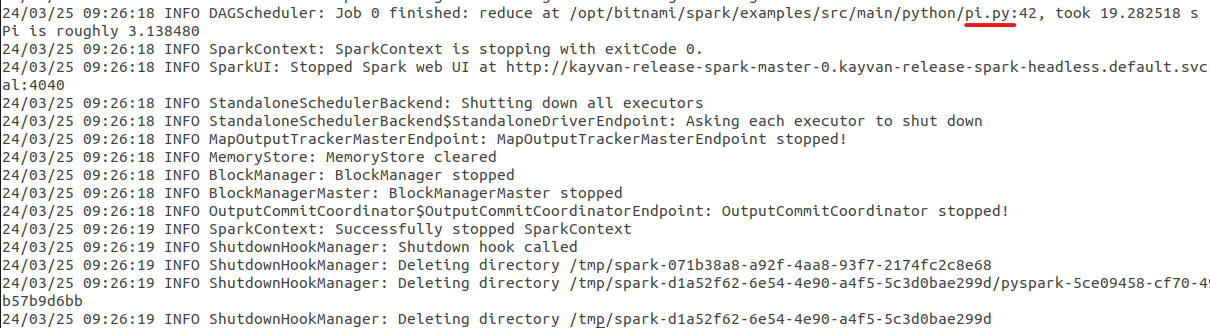

- The final result is 🍹 :

for scala :

for python :

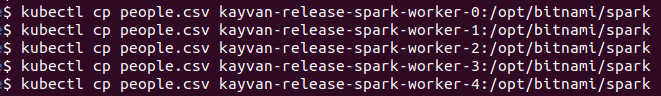

- Copy People.csv (large file) inside spark worker pods :

kubectl cp people.csv kayvan-release-spark-worker-{x}:/opt/bitnami/spark

Notes:

- you can download the file from link

- you can also use a nfs share folder for read large csv file from it instead of copying it inside pods.

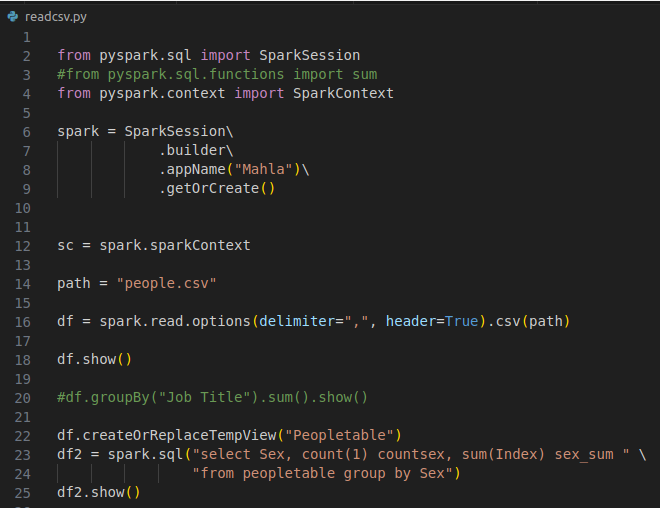

- Write some python codes inside readcsv.py please :

from pyspark.sql import SparkSession

#from pyspark.sql.functions import sum

from pyspark.context import SparkContext

spark = SparkSession\

.builder\

.appName("Mahla")\

.getOrCreate()

sc = spark.sparkContext

path = "people.csv"

df = spark.read.options(delimiter=",", header=True).csv(path)

df.show()

#df.groupBy("Job Title").sum().show()

df.createOrReplaceTempView("Peopletable")

df2 = spark.sql("select Sex, count(1) countsex, sum(Index) sex_sum " \

"from peopletable group by Sex")

df2.show()

#df.select(sum(df.Index)).show()- copy readcsv.py file inside spark master pod :

kubectl cp readcsv.py kayvan-release-spark-master-0:/opt/bitnami/spark

- run the code :

kubectl exec -it kayvan-release-spark-master-0 -- ./bin/spark-submit --class org.apache.spark.examples.SparkPi

--master spark://kayvan-release-spark-master-0.kayvan-release-spark-headless.default.svc.cluster.local:7077

readcsv.py

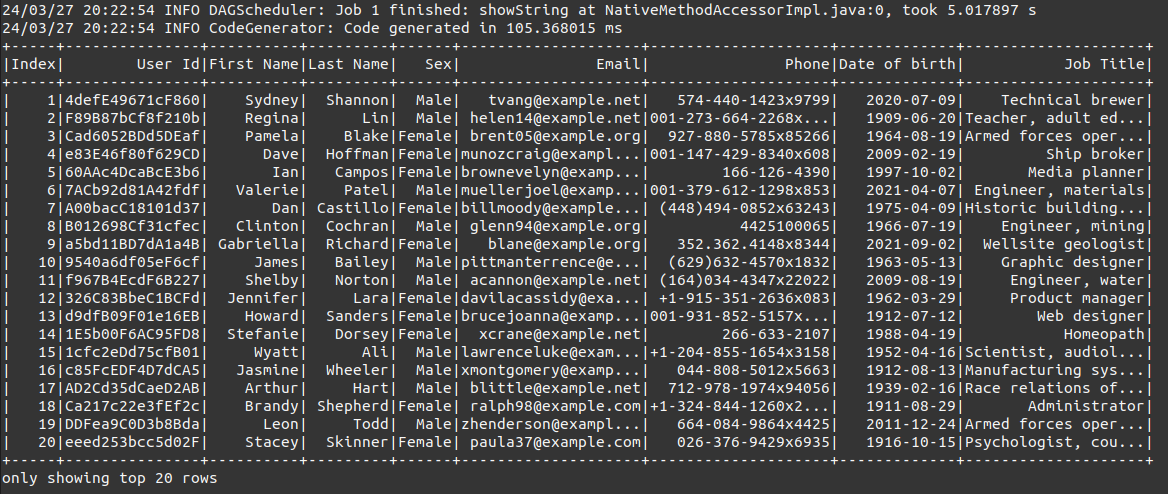

- showing some data :

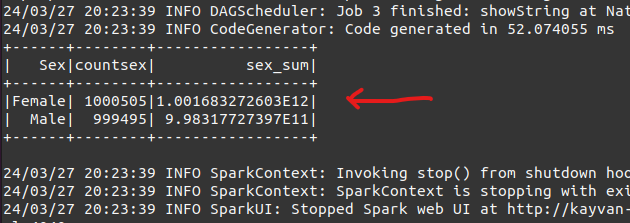

- the next result data:

- the time consuming for processing :

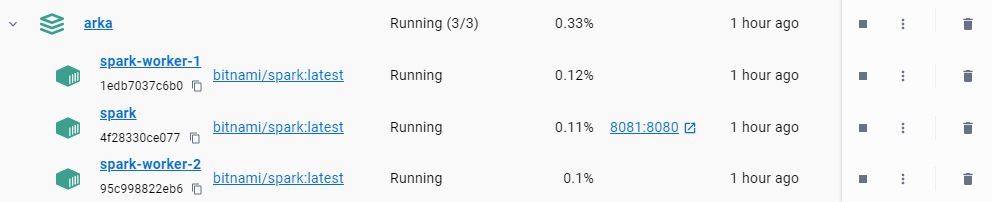

The other python Programm on Docker Desktop :

docker-compose.yml :

version: '3.6'

services:

spark:

container_name: spark

image: bitnami/spark:latest

environment:

- SPARK_MODE=master

- SPARK_RPC_AUTHENTICATION_ENABLED=no

- SPARK_RPC_ENCRYPTION_ENABLED=no

- SPARK_LOCAL_STORAGE_ENCRYPTION_ENABLED=no

- SPARK_SSL_ENABLED=no

- SPARK_USER=root

- PYSPARK_PYTHON=/opt/bitnami/python/bin/python3

ports:

- 127.0.0.1:8081:8080

spark-worker:

image: bitnami/spark:latest

environment:

- SPARK_MODE=worker

- SPARK_MASTER_URL=spark://spark:7077

- SPARK_WORKER_MEMORY=2G

- SPARK_WORKER_CORES=2

- SPARK_RPC_AUTHENTICATION_ENABLED=no

- SPARK_RPC_ENCRYPTION_ENABLED=no

- SPARK_LOCAL_STORAGE_ENCRYPTION_ENABLED=no

- SPARK_SSL_ENABLED=no

- SPARK_USER=root

- PYSPARK_PYTHON=/opt/bitnami/python/bin/python3docker-compose up --scale spark-worker=2

copy required files to containers :

for e.g.

docker cp file.csv spark-worker-1:/opt/bitnami/sparkpython code on master :

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("Writingjson").getOrCreate()

df = spark.read.option("header", True).csv("csv/file.csv").coalesce(2)

df.show()

df.write.partitionBy('name').mode('overwrite').format('json').save('file_name.json')run the code on spark master docker container :

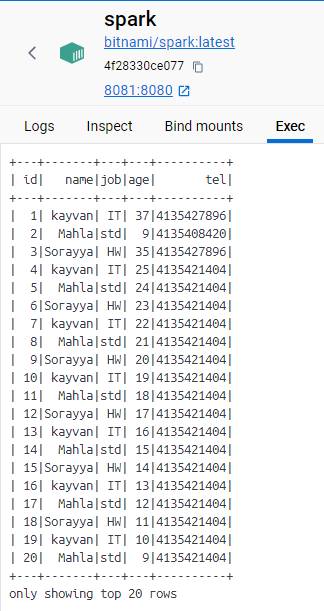

./bin/spark-submit --master spark://4f28330ce077:7077 csv/ctp.pyshowing some data :

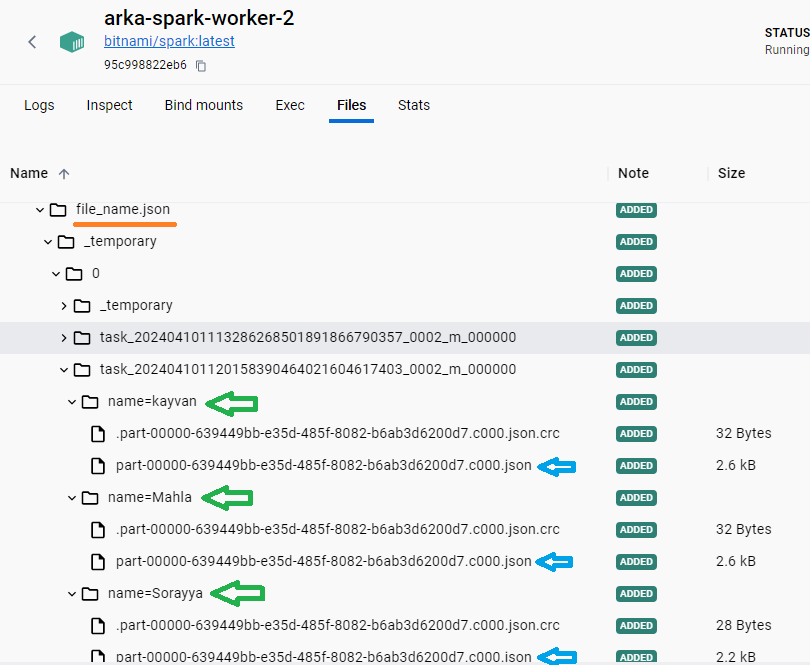

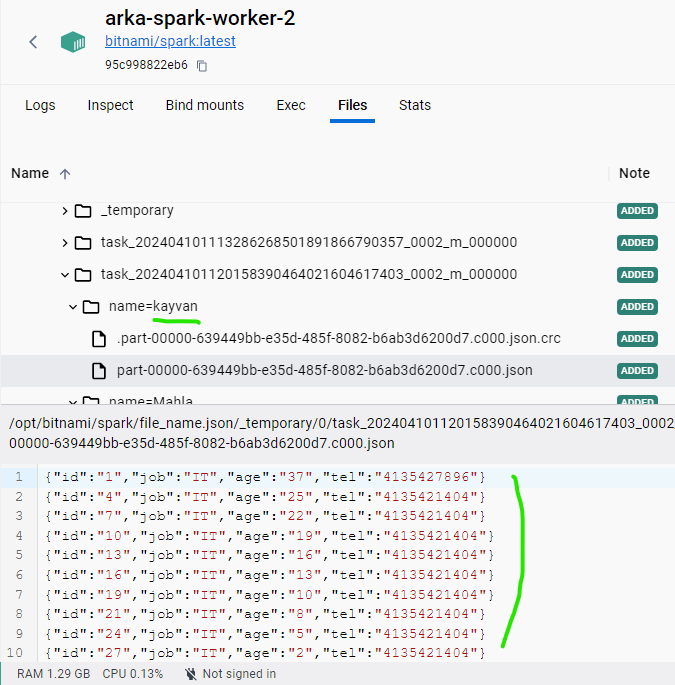

and the seperated json files based on name partitioning :

data for name=kayvan :